Artificial intelligence is making plenty of headlines these days — and, in some cases, even writing them. Some concerns are valid, some are overblown, but as the global economy embraces the emerging technology, there’s no avoiding the larger conversation. There’s also no denying AI’s real-world potential. For every Sports Illustrated byline scandal or news story about the danger of self-driving cars, there’s an untold story of how AI research promises to change our world for the better, and a lot of that research is happening right here at the University of South Carolina.

A Better Assessment

Forest Agostinelli and Homayoun Valafar, College of Engineering and Computing

Susan Lessner, College of Engineering and Computing and School of Medicine Columbia

Fifteen-year-old Forest Agostinelli holds his breath. He has the first and second layers, but the Rubik’s Cube is still a patchwork of colors, and the last layer is the toughest. He turns the cube over in his hands, turns one face, then another, then — Aha! The last few moves become clear in his mind. He turns and turns and turns again. Reds switch places with blues, yellows give way to greens.

The last layer clicks into place.

Done.

As a kid, Agostinelli couldn’t resist puzzles, and that interest followed him into his academic career. While earning his Ph.D. at the University of California, Irvine, he worked with colleagues to develop DeepCubeA, a program that made headlines in 2019 for teaching itself to solve the Rubik’s Cube.

Today, the assistant professor of computer science and engineering is still solving puzzles. Agostinelli, who joined the University of South Carolina in 2020, is one of the core faculty members of USC’s Artificial Intelligence Institute. His overarching research goal? To understand how people can use AI to discover new knowledge.

Case in point: One of his most recent projects looks at peripheral arterial disease, a condition in which fatty blockages in the arteries of the legs become calcified, increasing the risk of heart attack or stroke. Right now, doctors must assess calcification by eye, relying on prior experience, because software that can analyze these scans does not yet exist. Agostinelli wonders if AI can change that. Could AI be trained to detect calcification and identify dangerous calcification patterns? Could physicians then weigh those findings, along with biomarkers in the patient’s blood, to improve preventive care?

“You’re making these really big decisions about what needs to be done, including whether or not a limb needs to be amputated,” Agostinelli says. “Doing so without it being necessary is catastrophic. Not doing so when it was necessary is catastrophic. For a patient, they will feel more confident if this decision is made based on more information than just eyeballing, which is the best we can do right now.”

More than that, AI could even discover novel causes of high-risk peripheral arterial disease and lead to new prevention strategies.

Agostinelli and his collaborators, computer science and engineering professor Homayoun Valafar and biomedical engineering associate professor Susan Lessner, have already gathered preliminary patient data, including outcomes and the biomarkers identified in each patient’s blood work. From there, Agostinelli will create a logic program that builds on that data to provide an objective calcification score.

That approach is critical because other types of AI, while effective, can make mistakes that are hard to catch. One small quirk or error in a neural network’s input, for example, can lead to wildly inaccurate output. More importantly, a neural network’s decision-making happens in what scientists call a “black box,” meaning users can’t see how it reached a result or what went wrong. A logic program, on the other hand, generates explainable results and earns greater trust from its users.

Agostinelli envisions a future where AI is explainable, where users can lift the lid off the black box, weigh in with their own ideas and learn how to solve any number of problems, including those in chemical synthesis, theorem proving and robotics.

“Can humans and AI collaborate to solve these problems?” he asks. “I think this is the goal for a lot of people who work in AI. We’re trying to do the things that humans actually can’t do — and then augment what we can do to make us almost superhuman.”

Planting a Stake

Sourav Banerjee, College of Engineering and Computing

With nearly 25,000 farms and 4.8 million acres of farmland across South Carolina, it’s no surprise agribusiness is big business in the Palmetto State — with an annual economic impact of roughly $58.1 billion. The nation’s worsening farm labor shortage has a big impact, too. It’s one of the reasons grocery prices increased by more than 11 percent in 2022.

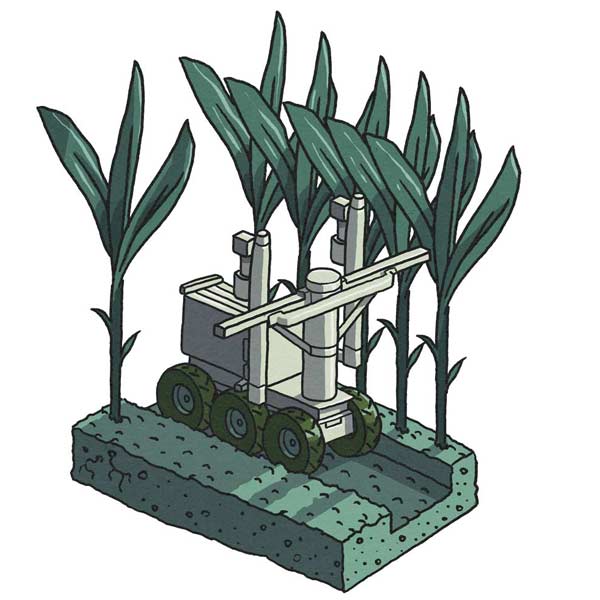

Mechanical engineering professor Sourav Banerjee believes AI-powered robots are a possible solution. He has secured funding from the South Carolina Department of Agriculture to build a prototype robot that can place and remove stakes in fields, a labor-intensive process that is necessary for bell peppers, tomatoes and other plants with weak stems.

An illustration of a prototype robot that can place and remove stakes in fields, a labor-intensive process that is necessary for bell peppers, tomatoes and other plants with weak stems.

Compared with some other sectors like health care, where AI has spurred rapid advancement, agriculture is behind the curve.

“If you see robotics in the medical field, researchers have been investigating it for the last 20 years, and already some companies have started to take advantage of it. That’s why they are ahead in the game,” Banerjee says. “The agricultural field does not necessarily have to be that sophisticated, but at the same time, this market has not been tapped at all, and farmers desperately need help.”

StakeBot, the prototype Banerjee and his team have developed, is narrow enough to drive between rows of crops and is equipped with robotic arms for placing or removing stakes two at a time. A sensor on the front helps it navigate the terrain, and the robot has learned how to respond to various obstacles thanks to months of testing in the lab. Once field tests are complete, StakeBot will be ready for production should a company decide to take it to market.

Stakes are just the beginning. Banerjee says it would be feasible to develop a version that can plant seeds as well. His team is also developing a virtual model of a scaled-up robot capable of working six rows at a time instead of two. In the not-so-distant future, he envisions a world where similar technology can assess plant health and assist in poultry processing.

“Farming is challenging work to do, and to maximize their profit, farmers need help in South Carolina,” Banerjee says. “So many people complain about the price of food increasing but don’t think about the actual societal needs that we have. That’s what I feel I can contribute.”

Snap Decisions

Erfan Goharian, College of Engineering and Computing

In the city of Mashhad, Iran, where Erfan Goharian grew up, the summers were so dry that water rationing wasn’t uncommon. But when winter rolled around, the city would often experience heavy snow and rain — sometimes enough to cause dangerous flooding.

“Seeing both extremes and the problems associated with them pushed me toward water resources engineering,” says Goharian, now an assistant professor in USC’s College of Engineering and Computing.

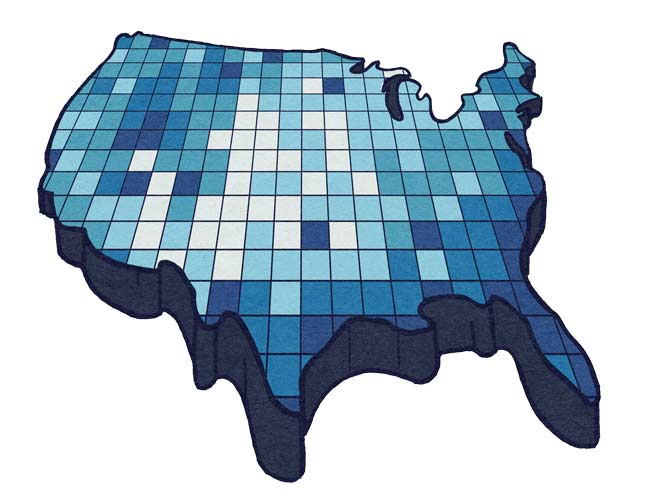

Since then, he has sought innovative ways to improve flood management. He recently received a prestigious National Science Foundation CAREER award, an NSF Smart and Connected Communities award, and other grants from the National Oceanic and Atmospheric Administration and U.S. Geological Survey to study how AI and novel technologies can be used in flood prediction and modeling. In addition to helping scientists better understand coastal and urban flooding, his findings will improve early warning systems and flood control infrastructure.

Erfan Goharian, an assistant professor in USC’s College of Engineering and Computing, recently received a prestigious National Science Foundation CAREER award to study how AI and novel technologies can be used in flood prediction and modeling.

Intense periods of flooding and drought aren’t unique to Goharian’s hometown in northeastern Iran; he saw the same pattern again while earning his Ph.D. and doing a postdoc in California. In fact, severe floods are increasing in frequency. These include the rare but statistically possible events that experts call 100-year and 500-year floods, meaning floods with a 1 percent or 0.2 percent chance, respectively, of occurring in any given year.

They are also difficult to predict. Factors including land development, climate change and aging or inadequate infrastructure mean no two weather events will behave the same.

“What we built might be designed 30 years ago and based on the rainfall that we saw and recorded back then, what we call a stationarity assumption,” he says. “We should design the new infrastructure based on non-stationarity. A 100-year storm 30 years ago is different from a 100-year storm nowadays.”

And the 100-year storms we can expect to see over the next 30 years, he adds, will be different from what we encounter today.

That’s why real-time data is so critical for effective flood management. But which data source is best? Satellites are capable of monitoring broad geographical areas but have to be in the right place at the right time. And sensors, while more responsive, have limited geographic ranges.

Goharian and his team believe camera-generated images and videos are a happy medium when it comes to gathering accurate data quickly. As part of two NSF projects, they are placing ground-based cameras in test areas in South Carolina and California. The resulting images will be combined with images from other sources, including drones and satellites. They will then be processed through deep learning networks that have been trained to detect pixels of water, providing valuable insights on the flood’s behavior and important hydrologic measurements.

“For example, if you detect water in a street, then we can tell you the depth of the water or the area that is inundated by water,” Goharian says. “In some specific cases, we can give you the velocity of water or the inundation rate, which are very important because they can tell us which direction the water is moving. And that tells us which streets we need to close before the water reaches them.”

Beyond improving modeling and forecasting, he is hopeful that his research will help decision-makers act quickly during crises. While living in northern California in 2017, he saw one such crisis unfold. Damage to the Oroville Dam spillway led to large-scale evacuations in communities downstream as officials scrambled to avoid catastrophic flooding. In situations like these, AI can serve as a policy optimization framework, allowing users to consult models that have been trained on data from millions of potential scenarios.

“We have lots of data, and we need to consider and analyze all of it during a short period to make the best decision,” he says. “Humans usually just look at a few factors, but the machine can help us analyze much more data in a short time to make a better decision. These decisions can be designed beforehand and translated into optimal decision trees which tell us the best thing to do when something happens, and in real-time decision-making, that helps us a lot.”

Responding to Stress

Nick Boltin, College of Engineering and Computing

It’s Friday night, and the local emergency room is swarming. Physicians and nurses move from patient to patient, and the paramedics keep rolling in more. There’s a woman with a suspected heart attack. There’s a man injured in a car accident. There’s a small child struggling to breathe. And then there’s the waiting area.

The physicians appear to maintain their cool amid the chaos. But are they really OK? Could high-stress, traumatic events during ER shifts eventually lead to burnout or other mental health issues?

Biomedical engineering instructor Nick Boltin believes the answers to questions like these can be found by monitoring brainwaves. And with some help from AI, doctors could even receive real-time insights and recommendations to better cope with these events.

But there is no formula for predicting which experiences will register as traumatic.

“Some clinicians may think a car accident is extremely traumatic,” Boltin says. “Some may have trouble with seeing babies or infants come into the hospital. Some have even told us that just having to log everything into the computer is the most stressful and traumatic thing for them. It really is personalized to each individual. And when they’re having those moments, we can’t see what’s happening on the inside.”


Boltin has partnered with Greenville-based bio-neuroinformatics company Cogito to test a prototype EEG device designed to detect acute stress reactions. The team has also enlisted students, including some from USC’s biomedical engineering program.

“I’ve been able to take undergraduates, train them up on how to collect data, how to use these devices, and actually put them in the emergency department so they stand side by side with these clinicians as they go through their daily routines,” he says. “The students absolutely love it. They’ve been fascinated by getting real-world experience.”

Thanks to grant funding from SC EPSCoR and the Health Sciences Center at Prisma Health, the team has gathered data from a cohort of emergency department clinicians at Greenville Memorial Hospital, a Level I trauma center.

How does the device work?

An electrode is embedded in a surgical cap so it can sit discreetly on the doctor’s forehead. Brainwave data is relayed to a belt pack. From there, the data can be fed through an AI-powered decision support system, which Boltin and his team are working to develop.

“The idea is that, maybe for one event, the peak frequencies weren’t quite as high, maybe the intensity wasn’t quite as much,” he says. “But over a prolonged period of time, if multiple traumatic events add up over a shift, this is where the decision support comes in. We can make recommendations to that clinician. ‘Hey, maybe you need to take a break. Maybe you need to sit down, relax, go meditate.’”

It is estimated that emergency physicians experience PTSD at a rate four times that of the general population. Boltin’s hope is that the device will allow for the detection of acute stress disorder, a precursor to PTSD, opening the door for intervention and prevention. Long term, there are also applications for military members and workers in other high-stress industries.

“Provider wellness is a crucial and significant component of health care delivery and the way that our health system actually performs,” Boltin says. “By doing this project and taking an approach to characterize and monitor these neurological biomarkers, we can help prevent these providers from getting burnout.”

Reconstructing the Past

Matt Simmons, College of Arts and Sciences | Story by Bryan Gentry

Nash Deason compares the image of a handwritten probate record with a typed transcript created by an AI program. When the senior history major and Institute for Southern Studies student worker finds a mistake, he types a correction. Eventually, the AI program will take note of Deason’s corrections so it can decipher the next document more accurately.

The data in these records could aid genealogists tracing family trees and help historians reconstruct a picture of the past. But without a convenient way to search for the treasures embedded in the cursive handwriting, a researcher must turn pages and read them line by line. AI is making those treasures a bit easier to find.

“A mature algorithm will be able to read these documents with a high level of accuracy,” says Matt Simmons, Institute for Southern Studies assistant director.

The institute recently created the Southern History Archives Research and Education Consortium (SHARE), a network of historians committed to creating digital, searchable copies of massive archive sets. Staff members from University Libraries helped the institute adapt Amazon’s text-reading software to produce the transcripts.

“This will allow us, along with our SHARE partner institutions, to process documents quickly,” Simmons says. “By avoiding the tedious work of human-produced transcriptions, SHARE will be able to create a massive database of primary-source records in a timely fashion.”

SHARE has started digitizing probate records in the South Carolina Department of Archives and History. These documents list the items a person owned at the time of death and named in a will, making them a rich source of information about people, material culture, economics and more.

Meanwhile, the work of Deason and other researchers will continue to train the algorithm. Finishing the digitization of those records and publishing them online will take several years. After that, the institute hopes to partner with other universities to unearth more historical treasures in archives throughout the South.

“In time,” Simmons says, “we want SHARE to be the most complete and accessible collection of primary-source documents related to the South before 1900 available anywhere.”